I frequently invent new words and phrases (e.g. wungus, tingerling, little bibble). I don't know what any of these words mean, but they sound like they should mean something. Can AI help us solve this problem? Probably not, but we might as well try.

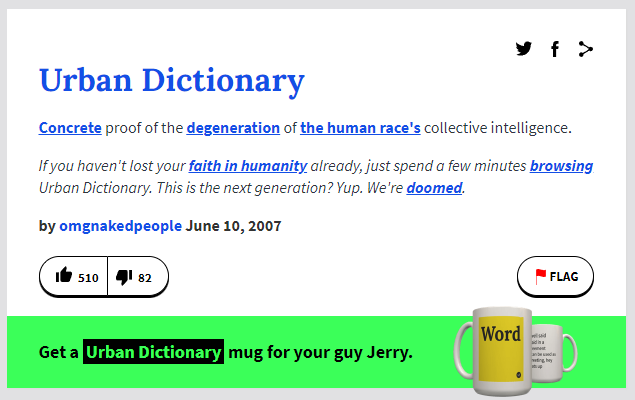

UrbanDictionary is a website that lets internet users share definitions for slang words. UrbanDictionary conveniently has a definition for itself:

The sheer quantity of definitions (along with this convenient dataset) made me wonder if a modern transformer-based NLP model could generate UrbanDictionary-style words and definitions of its own. Only one way to find out!

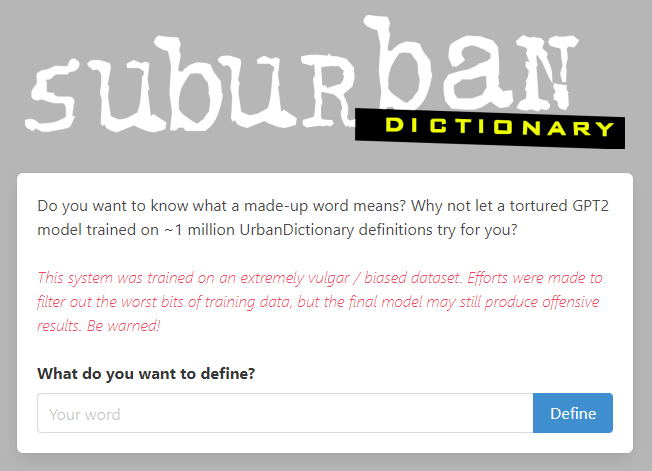

And so, SuburbanDictionary was born.

The model that powers this is a GPT-2 model from Hugging Face Transformers. Using free Google Colab TPUs (thanks Google!), I fine-tuned it on roughly 1 million word-definition pairs before deploying it on Hugging Face's hosted inference platform. All of the code involved is available on my GitHub.
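GPT-2 is trained on plain text, so each word-definition pair has to be flattened into a single string before fine-tuning. Below is a minimal sketch of one way to do that, assuming a hypothetical ` :: ` separator between word and definition; only `<|endoftext|>` (GPT-2's end-of-text token) is real, and this is not necessarily the format the actual project uses.

```python
from typing import Optional, Tuple

# GPT-2's tokenizer uses this string as its end-of-text token.
EOS = "<|endoftext|>"
# Hypothetical separator between a word and its definition.
SEP = " :: "


def to_training_text(word: str, definition: str) -> str:
    """Serialize one word-definition pair into a single training string."""
    return f"{word.strip()}{SEP}{definition.strip()}{EOS}"


def parse_generated(text: str) -> Optional[Tuple[str, str]]:
    """Split model output back into (word, definition), or None if malformed."""
    text = text.split(EOS, 1)[0]  # ignore anything generated after the first EOS
    if SEP not in text:
        return None
    word, definition = text.split(SEP, 1)
    word, definition = word.strip(), definition.strip()
    if not word or not definition:
        return None
    return word, definition


pairs = [("wungus", "A thing that sounds like it should mean something.")]
corpus = "\n".join(to_training_text(w, d) for w, d in pairs)
```

With a format like this, you can ask the fine-tuned model to define one of your own words by prompting it with `"wungus :: "` and letting it complete the rest, or prompt with nothing and let it invent the word too.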

The hardest part of training this network, by far, was the filtering. Most of the definitions on UrbanDictionary are riddled with misspellings and inconsistent formatting; to make matters more annoying, many of the definitions in the text corpus were actually several definitions stuck together with inconsistent syntax. Undoing these mistakes took a hilariously large pile of hacky regex.
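To give a flavor of that regex work, here is a small sketch that splits entries where several numbered definitions were glued together (e.g. `1. ... 2) ...`) and collapses messy whitespace. The patterns are hypothetical illustrations, not the project's actual cleanup code.

```python
import re
from typing import List

# Hypothetical pattern: a definition number like "1." or "2)" at the start of
# the text or after whitespace, which often marks several definitions glued together.
_NUMBER_MARKER = re.compile(r"(?:^|\s)\d{1,2}[.)]\s+")
_WHITESPACE = re.compile(r"\s+")


def normalize(text: str) -> str:
    """Collapse runs of whitespace (including newlines and tabs) into single spaces."""
    return _WHITESPACE.sub(" ", text).strip()


def split_definitions(raw: str) -> List[str]:
    """Split a raw entry that may contain several numbered definitions."""
    parts = _NUMBER_MARKER.split(raw)
    # re.split leaves an empty string before a leading marker; drop empties.
    return [normalize(p) for p in parts if normalize(p)]
```

This is deliberately crude: a sentence like "it hit version 2. Then..." would be split in the wrong place, which is exactly why the real filtering grew into a large pile of special cases.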

More concerning than the misspellings was the number of downright offensive definitions. Filtering these out was almost impossible (especially since GPT-2 had already been pretrained on a public internet corpus), but I did my best to strip out definitions that contained offensive words or had low ratings.
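A filter along those lines might look like the sketch below. The `Entry` field names, the placeholder blocklist, and the vote thresholds (`min_votes`, `min_ratio`) are all hypothetical; a real UrbanDictionary dump may label its vote counts differently.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass
class Entry:
    word: str
    definition: str
    up_votes: int    # hypothetical field names; real dumps may differ
    down_votes: int


# Placeholder blocklist; a real one would be much longer.
BLOCKLIST: Set[str] = {"badword", "slur"}
_TOKEN = re.compile(r"[a-z']+")


def is_offensive(entry: Entry, blocklist: Set[str] = BLOCKLIST) -> bool:
    """True if any whole word in the entry appears in the blocklist."""
    tokens = set(_TOKEN.findall(f"{entry.word} {entry.definition}".lower()))
    return not tokens.isdisjoint(blocklist)


def is_well_rated(entry: Entry, min_votes: int = 10, min_ratio: float = 0.6) -> bool:
    """True if the entry has enough votes and a high enough share of upvotes."""
    total = entry.up_votes + entry.down_votes
    return total > 0 and total >= min_votes and entry.up_votes / total >= min_ratio


def filter_entries(entries: Iterable[Entry]) -> List[Entry]:
    """Keep only entries that pass both the blocklist and the rating check."""
    return [e for e in entries if not is_offensive(e) and is_well_rated(e)]
```

Matching whole words avoids flagging innocent words that merely contain a blocked substring, but it also misses deliberate misspellings, which is part of why this kind of filtering is never complete.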

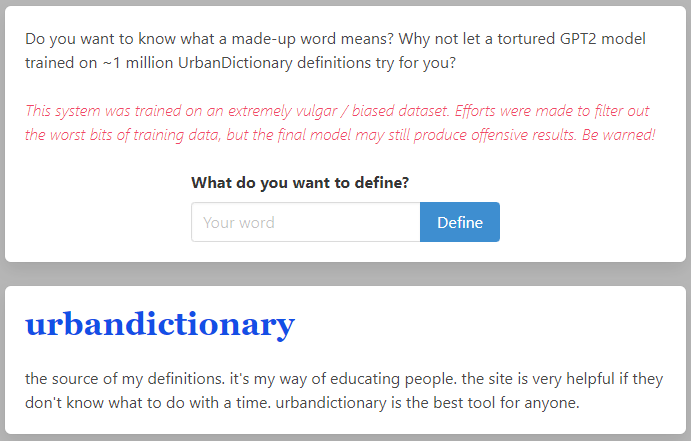

Hey, look! SuburbanDictionary is happier than its parents!